The Surveillance Pipeline – AI Is Watching You

Computer vision is revolutionizing AI — but beneath its promise lies a surveillance infrastructure quietly built by top labs and tech giants. New research reveals how visual AI is systematically eroding privacy and reshaping society under the guise of innovation.

Among the many applications Artificial Intelligence (AI) brings with it, from healthcare to self-driving cars, computer vision is often cast as the champion carrying the torch to the future. We want to believe that story because it makes us feel good. But it has a darker side: a story of unchecked innovation that may be undermining the social good.

Researchers recently cited in the journal Nature have unearthed an unsettling empirical history that tears the veil off a much less innocent truth: the ties between groundbreaking computer-vision research and the steady advance of mass surveillance are deep, pervasive, and deliberate.

To understand how weighty this is, it helps first to be clear about what computer vision is. It is visual AI that measures, records, represents and analyses the world based on visual data: images and video. Historically, its origins were admittedly martial. It was used for target identification, intelligence gathering, and military and police work.

However, the scale of its use has changed drastically, and it is clearly no longer a niche. New research based on over 20,000 papers, a large swath of them drawn from a leading computer-vision conference, the Conference on Computer Vision and Pattern Recognition (CVPR), brings into view a stark picture of what the authors call the “surveillance AI pipeline”.

This is not a gradual shift: the number of computer-vision papers associated with downstream surveillance-enabling patents rose fivefold between the 1990s and the 2010s. Picture a minor brook of academic interest that, over twenty years of development, has swollen into a raging torrent of observation and been channeled directly into surveillance capabilities. Surveillance is now a field-wide norm: the erosion of human privacy has been systematically normalized and is deeply rooted in the discipline at large.

It also indicates that, perhaps unwittingly, the intellectual powerhouse of computer vision has become one of the main architects of an algorithmic panopticon.

The ‘Data’-fication of Humanity

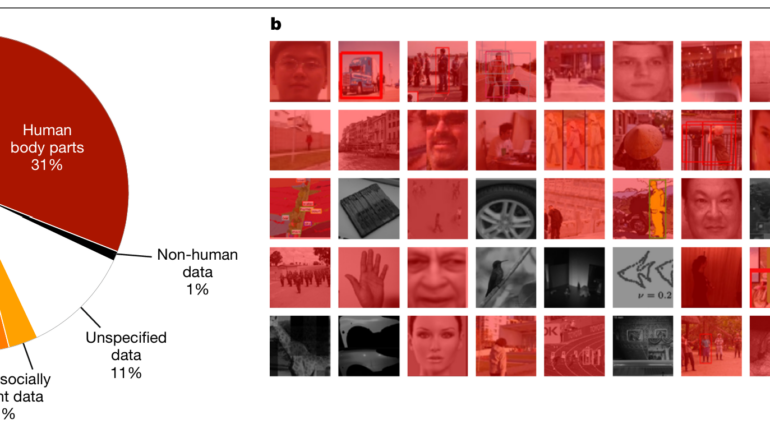

Just what is being surveilled? The study leaves little doubt that the majority of these papers and patents enable the systematic targeting of humans. We are seeing an unprecedented level of granularity: the capture and analysis of human faces, expressions, gaits, and even actions, such as walking down the street or passing through a shopping area. About 71% of the analyzed papers and 65% of the patents explicitly extracted information about human bodies and body parts, whereas only about 1% exclusively targeted non-humans. This ‘datafication’ is systematic, encompassing not only our physical bodies but also the spaces we inhabit (our homes, offices and streets), as well as other socially salient data: our identities, preferences and economic status.

Image: Human data extraction in computer-vision papers and downstream patents. Source: Nature (2025)

The implications of such extensive data collection are sweeping, as it often grossly violates fundamental freedoms such as the right to privacy and the right to freedom of expression. In addition, such technologies often operate without consent, and the data is stored indefinitely and aggregated. Such obsessive accumulation leads to what American scholar Shoshana Zuboff describes as the condition of ‘no exit’, in which the possibility of simply leaving the network shrinks to virtually zero.

Perhaps the most insidious element is the obfuscating language used in the field. Humans are constantly subsumed under the generic, apparently innocuous term ‘objects’. Think of a magician performing a trick: you are dazzled by talk of looking at objects, detecting objects, or analyzing a scene, when what you should really be watching is the sleight of hand performed before your eyes: a close dissection of humanity.

A paper may explicitly state that it aims to improve object classification, but a closer look at its figures reveals that the classes it is categorizing include ‘person’, ‘people’, or ‘person sitting’. This verbal sleight of hand lets such research proceed under the fig leaf of application-agnostic neutrality, even as it explicitly develops human-surveillance tools.