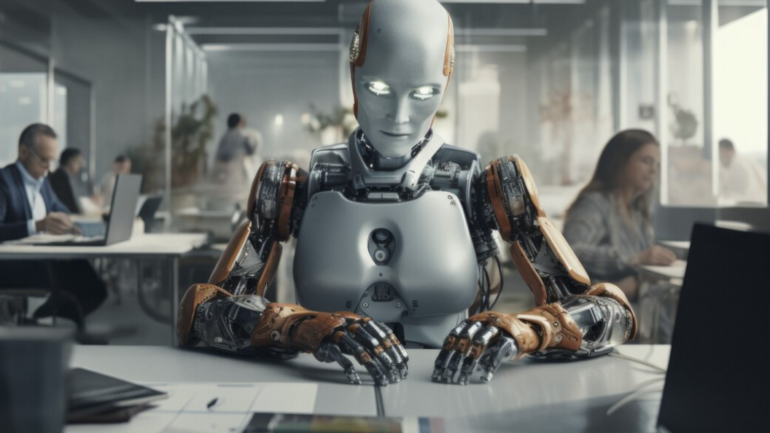

New AI Technique Allows Robots to Generate Their Own Expressive Behaviours

Integrating large language models into robotic systems could greatly expand how adaptable, useful and perhaps even caring machines can become in the future

People employ expressive behaviours to effectively communicate and coordinate their actions with others, such as nodding to acknowledge a person glancing at them or saying “excuse me” to pass people in a busy corridor. We would like robots to also demonstrate expressive behaviours in human-robot interaction. Prior work proposes rule-based methods that struggle to scale to new communication modalities or social situations, while data-driven methods require specialised datasets for each social situation the robot is used in.

A team of researchers from the University of Toronto, Google DeepMind and Hoku Labs has developed a new technique that allows robots to dynamically generate expressive behaviours suited to different social situations, without the need for specialised training or manual programming. Called Generative Expressive Motion (GenEM), the method taps into the reasoning and language capabilities of large language models (LLMs) to contextually adapt a robot’s motions and actions.

The main premise of the new technique is to use the rich knowledge embedded in LLMs to dynamically generate expressive behaviour without the need for training machine learning models or creating a long list of rules. For example, LLMs can tell you that it is polite to make eye contact when greeting someone or to nod to acknowledge their presence or command.

The Traditional Challenge of Robot Expressiveness

Expressiveness refers to a robot’s ability to convey internal states and intents through physical behaviours. This can include facial expressions, body language, motions, sounds, and lights. Traditionally, engineers have used rule-based systems in which behaviours are manually coded for each scenario. However, these systems adapt poorly to new situations or preferences. More recent data-driven approaches overcome some of these limitations but still require specialised datasets to be collected for training machine learning models.

The Breakthrough Idea Behind GenEM

GenEM is based on the key insight that the knowledge already embedded in LLMs can be utilised to dynamically reason about social contexts and map desired behaviours to a robot’s capabilities. For example, LLMs can deduce that maintaining eye contact when greeting someone is polite without needing additional training.

Overview of the GenEM Pipeline

The GenEM pipeline starts with a natural language instruction describing a behaviour or social situation. A first LLM then reasons about how a person would appropriately respond in that situation. A second LLM translates this response into a step-by-step procedure of motions suited to the robot’s capabilities. Finally, a third, API-mapping LLM converts the procedure into executable API calls. Optional human feedback can then refine the resulting behaviour.
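The stages described above can be sketched as a chain of prompted calls. This is a minimal illustration and not the researchers' implementation: the prompt wording, the `run_pipeline` function, the `LLM` callable type, and the stub replies are all assumptions made up for this example, and a real system would replace the stub with a call to an actual language model.

```python
from typing import Callable, List, Optional

# Hypothetical interface: any function that maps a prompt string to reply text.
LLM = Callable[[str], str]


def run_pipeline(instruction: str, capabilities: str, api_docs: str,
                 llm: LLM, feedback: Optional[str] = None) -> str:
    """Chain three prompted LLM calls, plus an optional feedback pass."""
    # Stage 1: reason about how a person would respond in this situation.
    human_response = llm(
        "Describe how a person would respond in this situation.\n"
        f"Situation: {instruction}"
    )
    # Stage 2: translate that response into motions the robot can perform.
    procedure = llm(
        "Rewrite this human behaviour as step-by-step robot motions.\n"
        f"Robot capabilities: {capabilities}\n"
        f"Human behaviour: {human_response}"
    )
    # Stage 3: map the procedure onto the robot's API.
    api_calls = llm(
        "Convert this procedure into calls to the robot API.\n"
        f"API: {api_docs}\n"
        f"Procedure: {procedure}"
    )
    # Optional stage: revise the result in light of human feedback.
    if feedback:
        api_calls = llm(
            "Revise these API calls to address the feedback.\n"
            f"Feedback: {feedback}\n"
            f"API calls: {api_calls}"
        )
    return api_calls


def make_stub_llm(replies: List[str]) -> LLM:
    """Return a fake LLM that replays canned replies, for offline testing."""
    queue = list(replies)

    def stub(prompt: str) -> str:
        return queue.pop(0)

    return stub


if __name__ == "__main__":
    # All replies and API names below are invented for the demo.
    llm = make_stub_llm([
        "Nod to acknowledge the person.",
        "1. Tilt head down. 2. Tilt head back up.",
        "set_head_tilt(-15)\nset_head_tilt(0)",
    ])
    print(run_pipeline("A person walks past and waves.",
                       "pan/tilt head", "set_head_tilt(degrees)", llm))
```

Keeping each stage as a separate call means the intermediate text (the human response and the procedure) can be inspected or corrected before anything reaches the robot.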

Key Benefits of the GenEM Approach

Researchers highlight several advantages of GenEM over existing methods:

- It can generate multimodal expressive behaviours utilising a robot’s full range of capabilities.

- The system adapts in real time to human feedback and can combine existing behaviours into new ones.

- As a prompt-based approach, GenEM does not require model retraining or dataset collection when applied to new robots. It only needs adjustment for their affordances and APIs.

- It composes complex behaviours from simple building blocks through LLM reasoning.

- The modular architecture is more effective than having a single LLM translate instructions directly into API calls.
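To make the point about new robots concrete, here is a rough sketch of how a prompt-based system might be ported: only the robot-specific text changes, while the prompt template and the model stay the same. The `RobotProfile` class, the `build_api_prompt` function, and both example robots with their API names are hypothetical and do not come from the GenEM work.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass(frozen=True)
class RobotProfile:
    """Robot-specific text pasted into the prompts (hypothetical structure)."""
    name: str
    affordances: Tuple[str, ...]
    api_signatures: Tuple[str, ...]


def build_api_prompt(profile: RobotProfile, procedure: str) -> str:
    """Fill one shared prompt template with a given robot's details."""
    lines = [
        f"You control a robot called {profile.name}.",
        "It can: " + "; ".join(profile.affordances) + ".",
        "Available API functions:",
    ]
    lines += [f"  {sig}" for sig in profile.api_signatures]
    lines += ["Convert this procedure into API calls:", procedure]
    return "\n".join(lines)


# Two made-up robots: porting means writing a new profile, nothing else.
WHEELED = RobotProfile(
    name="wheeled-base",
    affordances=("tilt and pan its head", "change the colour of a light strip"),
    api_signatures=("set_head_tilt(degrees)", "set_light_colour(name)"),
)
ARM = RobotProfile(
    name="desk-arm",
    affordances=("move its gripper", "play short sounds"),
    api_signatures=("move_gripper(x, y, z)", "play_sound(name)"),
)
```

Calling `build_api_prompt(WHEELED, procedure)` and `build_api_prompt(ARM, procedure)` with the same procedure yields two prompts that differ only in the robot description, which is the sense in which no retraining or new dataset is involved.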

Testing and Validating the GenEM Framework

The researchers evaluated GenEM by comparing the behaviours it generated with behaviours scripted by a professional animator. They found that the two sets were comparably understandable. Critically, GenEM achieved this without any prior data and far more quickly. Participants also responded positively to GenEM’s ability to take in and adapt to feedback.

While promising, there remain opportunities to build on GenEM’s foundations in areas like multi-interaction scenarios and richer action spaces. Nonetheless, early results highlight the usefulness of LLMs for flexible and creative expressiveness.

The Future Possibilities of LLM-Driven Robotics

The researchers posit that GenEM demonstrates a proof of concept for wider applications of LLMs in robotics.

This approach presents a flexible framework for generating adaptable and composable expressive motion through the power of large language models. By encoding complex reasoning abilities, LLMs may circumvent the need for manually engineered behaviours or specialised training for robots to exhibit intuitive social capabilities. As language models continue to progress in sophistication, integrating them into robotic systems could greatly expand how adaptable, useful and perhaps even caring machines can become in the future.